I sent my only "good" fastcap demo to speedcapture.com about a week ago, and it finally got added to the site over the last couple days.

cpmctf4(00-10-488)-cpm(1,Kitteh).dm_68

Still needs quite a bit of work, but it's a good run considering my skill level. It made me especially happy because I had beaten Alien's time, even though I know he can do better, and has proven it by posting a demo on the mDd forums. I used my own path, though, which makes it even better.

I plan on both improving this run and setting some more times, but until then, I'll be working on dfwc01-6 in VQ3 :)

Monday, 31 October 2011

"Hi-res" rocket now works.

Thanks to the bare minimum help from Morbus, I got the rocket working. What I did was, I imported the .md3 to Milkshape 3D, exported as .3ds, imported that into 3DSMax, used MeshSmooth on all the objects, exported as .3ds, imported that into NPherno's GL Viewer, fixed the texture paths (they were broken by Max), exported as .md3, imported that into Blender, re-aligned the objects (they were shifted 90 degrees, also by Max), then finally exported as .md3.

Now that I've written down all the steps, it seems apparent that there are a few that were quite unnecessary, but seemed appropriate at the time. The bottom line is that I got it all working, with only a little bit of help from other people (Shio taught me how to use Max and also gave me the Blender md3 plugin, Morbus reminded me that NPherno's GL Viewer kicked ass, and another friend just gave a suggestion or two).

Here is a gif to compare the "improved" model with the original:

Saturday, 29 October 2011

Doing some modeling.

So I was talking to Shio about something (it doesn't matter what it was, because we got completely off-track anyway), and after about 10 minutes, he ended up getting on Teamviewer to help teach me how to make stuff in 3DSMax. It worked, somewhat, and I imported the Quake 3 rocket, to try and smoothen it and make it sexier (more polygons = more qualities). It worked, but then came the complications, and the incredible anguish.

There was no way (that I knew of at the time) to convert the file properly from format to format. I had opened the .md3 in Milkshape 3D, then exported as .3ds, opened that in 'Max, did my changes, exported as .ase (I tried a number of formats, but I didn't have any md3 plugin, because they were meant for an older version that I didn't want to install), converted that to .md3 with q3data.exe, and loaded that in Q3. It loaded, but the shaders were broken and the model was misaligned.

We tried dozens of fixes, from downloading plugins and whole other programs, to opening the .ase in a text editor and changing all the paths by hand there. Nothing worked. Eventually, he had to go to bed, so I gave up as well after one last attempt. I'm going to ask around and see if anyone knows what to do, because I know this is possible. If anyone can get this working successfully, I will be very happy. It will mean that not only rockets, but other models, can be made much higher quality. The problem with all this is that, because of the very awkward way Quake 3 animations work, there's not really any way to increase the poly count on any models that have animations, such as player models. The people who made Chili Quake 3 hi-res'd the headmodel for each one, but that was as far as they could get without breaking the game.

Also, because of the way Quake 3 animations work, there's no way to redo the animations (at least, it doesn't seem reasonable to do manually, and it can't be automated. It's on the same level as writing a 1000-frame demo by hand), because they're "vertex animations". Each vertex has an animation, rather than a skeleton. This means that more polys = more lines of animation to do. With a model that has 8 polys, it isn't that big a deal, but when you go into the thousands, it just gets impossible.
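
To put rough numbers on that, here's a tiny sketch. It assumes the work is simply proportional to vertices times frames, and the counts are made up for illustration, not taken from any real model:

```python
# Rough sketch: in a vertex-animated model, every frame stores a position
# for every vertex, so the amount of data grows as vertices * frames.
# All counts below are made-up examples, not measurements.

def positions_to_author(vertex_count: int, frame_count: int) -> int:
    """Number of vertex positions stored across the whole animation."""
    return vertex_count * frame_count

low_poly = positions_to_author(vertex_count=8, frame_count=200)
high_poly = positions_to_author(vertex_count=3000, frame_count=200)

print(low_poly)   # 1600
print(high_poly)  # 600000
```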

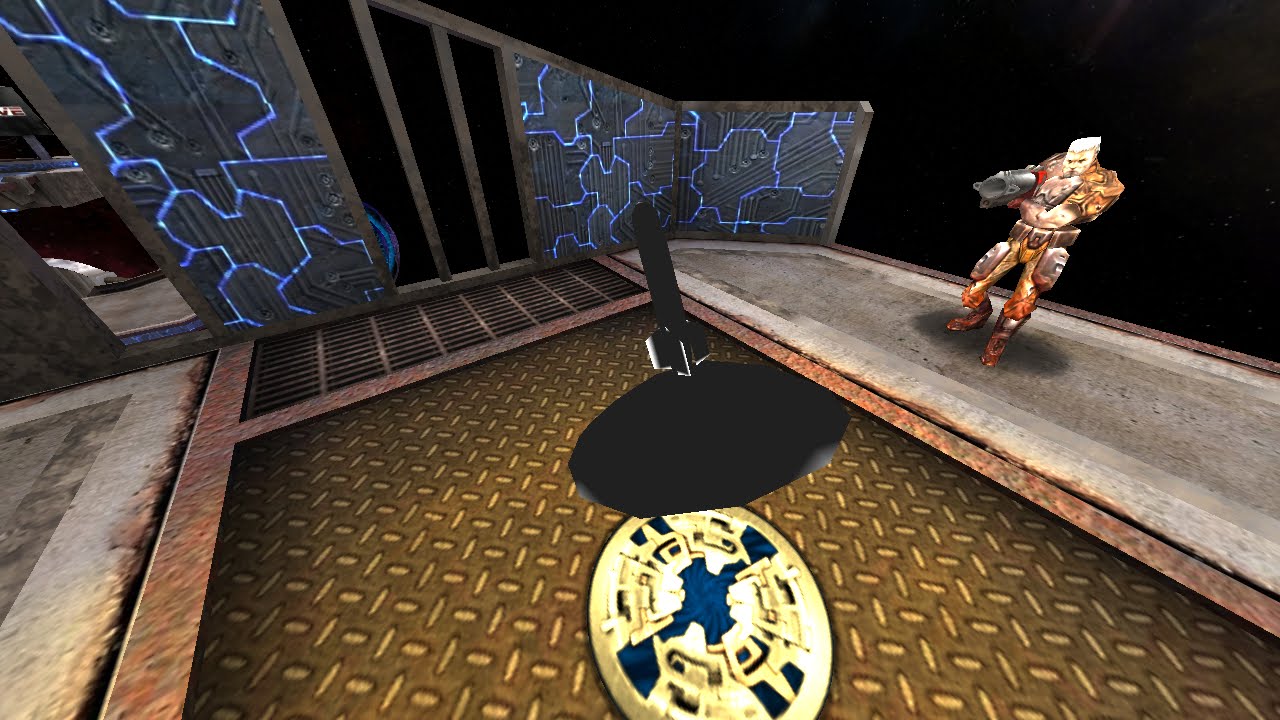

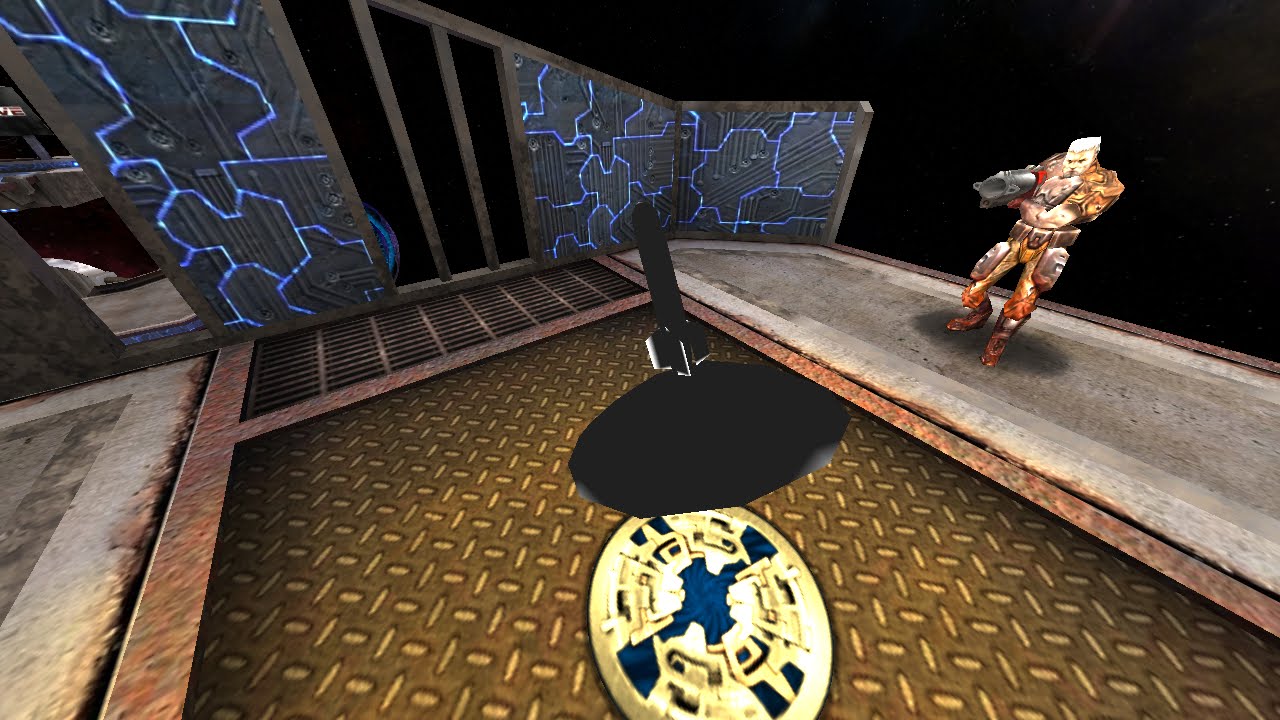

So, to finish off this post, I'm going to post a little image.

Friday, 28 October 2011

Adding brushes to maps (cont.)

I finished the map, and to my surprise, everything turned out exactly as planned.

There is a .pk3 available that has both demos and both .bsps, if anyone cares. It's about 85kb. The lighting is actually pretty cool with these compiler settings, so I might keep them in case I want them again. I'm pretty sure the main thing was -dark. I've wanted to compare these kinds of settings to figure out how they look, but that's for another day.

You can download the pk3 HERE

The video:

Adding brushes to maps

This is an idea that I've played around with only a few times over the past couple of years.

Basically, I decompile a .bsp (unless I have the .map, which is very rare), and add some brushes. It doesn't matter what they are, but it would be something like text, to substitute tracking in another program. Of course, the workability of this is very slim, because it's rare that the .map is available, and decompiling a .bsp is very buggy: Texture co-ordinates, lights, and a number of other things are lost, which makes cleaning up the .map not really worth it. There are cases where the .map, along with the compiling settings (!!!!) are available, though, and in these cases, it's very worth it to at least try this.

I'm going to figure out just how doable this is. I made an empty box, recorded a demo of me walking around in it, then added some "TEXT" (I made a few brushes and shaped them so they spelled out the word "TEXT"), and recompiled. However, I decided to play around with the compiler settings, and NOT using -fast on the light stage is just ridiculous. This map has a total of 16 brushes and one entity, but the light stage has been processing for at least ten minutes.

The demo wasn't really what I wanted, and I recorded it on the map without the text, so I'm re-recording it with the one that has text, then duplicating the demo and changing the mapname so I have one demo for each .bsp, which is a waste of time but if I were to give this to someone else, it would be necessary.

I'm curious to see how this works out, though I don't expect any surprises. This room only has one vis leaf, and it hasn't been retextured or anything, so it doesn't really matter much how it works out. It would be much more fruitful if I retextured a map with actual structure and multiple vis leafs, for realistic testing. I'm not nearly skilled enough to do that, however, so it's going to happen my way.

Mapping with oranje (cont.)

I've been doing my best to map my ideas, and so far it's been going pretty well. I've made a pack with about 6 bsps, but a lot of them are single rooms that are compiled together in one of the other bsps (for example, I have room3.bsp and teamrun4.bsp, but room3.bsp is just the third room in teamrun4.bsp, with a couple of different aspects).

I got loveless to help me with some testing, and it didn't go too well, but it gave me a pretty good idea to fix one of the tricks I had been thinking of for a while. I can't test it properly with only one person, so I have to wait until oranje is available, which will probably be this evening.

I also need to get in touch with Shio, and fix these msn problems so I can talk to kiddy, because I need some help, both with testing, and with mapping. Shio is probably the best mapper DeFRaG has, and he's made some incredibly advanced mechanics, plus he's willing to help. With the right people on board, this map could be finished rather quickly, at least at a fundamental level. I don't expect this map to be as advanced and crazy as thirdperson, but definitely up there with regards to 2-player maps.

A nice idea for a team map is one that all takes place in a relatively small area, as opposed to many different, completely detached, rooms. Inside one room, A has to get to X, and B to Y, but A needs the help of B to get to X, and B needs the help of A at X to get to Y. This is a basic scenario, and is pretty much what all team maps are based off of, but if this was done a number of times in the same room, it would be quite impressive. I have some pictures in my head of what it could look like, but the main problem with these types of maps is that they're usually so easy to abuse and exploit, which is why making one that works properly is such a feat.

I might start working on one in between my other map(s). And who knows, they might all become one map in the end!

Wednesday, 26 October 2011

Mapping with oranje

Over the past few days, I've been making DeFRaG maps with oranje. We beta test them on his server, discuss bugs, changes, improvements, etc., then apply the changes immediately. We've had a few ideas, and made most of them into maps on the same day.

Our main project is a 2-player CPM run, but we only have one room completed and vq3-proofed. We've been making a lot of small, one-room maps meant to test ideas and practice mapping, which is working quite well.

While we make these maps, he's also working on his own 2-player VQ3 map, which we also test.

Our ideas are pretty sweet, but a little too advanced for us (mostly me, but some of the things are also way out of his skill range), so I'm waiting to discuss these maps with Shio and get him to help us out a bit.

I don't expect our maps to be finished even before the end of the year, since, like all amazingly modest people, we are never satisfied with our work.

Saturday, 22 October 2011

Moving these cams to Wolfcam once and for all (cont.)

At first, I did a simple camera with two points, and that worked perfectly, with no problems. Wolfcam .cam8 files look incredibly complicated, but you only need to pay attention to a total of 4 lines per point, and once you realize that, it becomes really simple. Of course, this only applies to basic cams, and when you start going crazy with them, every line becomes important.

Anyway, after I made the two-point cam work perfectly, I tried the only other one that I kept, and it failed. It was an utter failure, with no hint of success or anything. The only thing I learned was that, by default, Wolfcam doesn't use as smooth a curve to interpolate between points as CT3D does. I'm not sure how to address this yet, but it definitely needs attention if I'm going to do this again. I'll look at some cvars and try to do some configging, but god damn, this is an ugly video.

Here is the result:

I added the origin info to the video because I knew the result would suck, and I wanted to compare each frame from both outputs, to see if anything changed other than the Z origin.

Moving these cams to Wolfcam once and for all.

This seems like a pretty good way to convert CT3D cameras into Wolfcam's weird format. The only problem is that Wolfcam's format and playback method are pretty shit. If that gets fixed, however, then this would work wonders.

Basically, CT3D and Wolfcam both use camera points, except when a path is "created" in CT3D, it creates a .cfg with hundreds of points, because there is no interpolation or anything in-between points: it jumps from A to B to C. Because of this, it has to have the position for every single frame. But inside CT3D, it uses camera points and interpolates between them, which means that I can just take the co-ordinates from there and add them to a Wolfcam path. The saved paths from Wolfcam are really, really complicated and full of crap, though, so I'll have to set all the points in-game, and save it from there.
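
Here's a minimal sketch of the difference between the two representations: a handful of camera points versus one baked position per frame. It uses plain linear interpolation just to keep it short (I don't know what curve CT3D or Wolfcam actually use internally), and the function names and coordinates are made up:

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

def lerp(a: Vec3, b: Vec3, t: float) -> Vec3:
    """Linear interpolation between two points, t in [0, 1]."""
    return (
        a[0] + (b[0] - a[0]) * t,
        a[1] + (b[1] - a[1]) * t,
        a[2] + (b[2] - a[2]) * t,
    )

def bake_path(points: List[Vec3], frames_per_segment: int) -> List[Vec3]:
    """Expand sparse camera points into one position per frame."""
    baked: List[Vec3] = []
    for a, b in zip(points, points[1:]):
        for f in range(frames_per_segment):
            baked.append(lerp(a, b, f / frames_per_segment))
    baked.append(points[-1])  # include the final camera point itself
    return baked

# Three hypothetical camera points become 11 per-frame positions.
camera_points = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (10.0, 10.0, 0.0)]
frames = bake_path(camera_points, frames_per_segment=5)
print(len(camera_points), len(frames))  # 3 11
```

Going the other way (per-frame positions back to a few points) loses information, which is why taking the co-ordinates from the camera points themselves is the easier route.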

A few problems arise, because the control is drastically changed in-between programs, and I can't really do much from inside Wolfcam; only add the points and hope it works, but in CT3D, I can modify the values instantly, change angles, change the behavior of everything, add points, and do other awesome shit that can't be done in Wolfcam.

Still, I'm going to try it, because there are other benefits of doing everything inside Wolfcam, mainly being able to see everything in real-time, along with whatever demo I'm using.

Friday, 21 October 2011

I finally got a demo.

Korfi sent me a demo today, and while it's not exactly what I wanted, it should work for what I want. I'm still trying to figure out how exactly I'm going to go about doing this thing, but at least now I'm ready to do something, so I have no more excuses when I just sit on my ass and do nothing.

I just don't really feel like doing this right now, but there's nothing else to do :(

Tuesday, 18 October 2011

Motivation.

I've lost all motivation to do anything. I'm just sitting here in my chair, with a blanket, trying to get comfortable so I can sleep. Life is pretty shit right about now, and has been for about 3 damn years.

Monday, 17 October 2011

Problems with trans-map experiment thing.

I ran into a problem. I recompiled the maps fine, and they're as ugly as I expected them to be, and the demo was recorded fine. It was a timescaled demo in CPM, but it works for now.

The problem is with lining up the demos. I'm not sure how exactly to go about doing it. I have the maps side-by-side, but obviously, if I play the demo back, it won't be aligned properly on the maps. Especially DM18, because I turned it 180 degrees. I'm not even sure if I have to line up the demo with the map, and have 3 different demos, or just make a cam based on the original map, and offset THAT, or what. But I do know that as soon as things get mildly complicated, my brain just ceases functioning.

Offsetting the demo itself is stupid without scripts (that I don't have), because there are thousands of frames that I would have to modify. That's already out of the question.

Offsetting the camera will be "fine", because I can open Radiant and look at how many units the maps are offset by vs. default, then just move the camera path over that much. What becomes a huge problem is DM18, because, as I said earlier, I turned it 180 degrees due to it not being easy to work with as-is. I wasn't thinking as far ahead as I should have been, because I could have just put it on the other side of the map and it would have been fine, since all the other maps are symmetrical (skyward, longestyard, spacectf, spacechamber).
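
As a rough sketch of what the offsetting would look like for each camera point: a plain translation for the maps that were only moved, and a 180-degree turn around a vertical axis for DM18 before the translation. The offset, pivot and sample point are made-up values, and the function names are just for illustration:

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]

def offset_point(p: Vec3, offset: Vec3) -> Vec3:
    """Move a camera point by the same amount the map was moved."""
    return (p[0] + offset[0], p[1] + offset[1], p[2] + offset[2])

def rotate_180_about_z(p: Vec3, pivot: Vec3) -> Vec3:
    """Turn a point 180 degrees around a vertical axis through pivot."""
    return (2 * pivot[0] - p[0], 2 * pivot[1] - p[1], p[2])

def rotate_yaw_180(yaw: float) -> float:
    """The view yaw has to turn with the map; pitch stays the same."""
    return (yaw + 180.0) % 360.0

# Hypothetical numbers: the map was turned around its own origin,
# then moved 2048 units along X.
point = (100.0, 50.0, 64.0)
turned = rotate_180_about_z(point, pivot=(0.0, 0.0, 0.0))
moved = offset_point(turned, offset=(2048.0, 0.0, 0.0))
print(moved)                 # (1948.0, -50.0, 64.0)
print(rotate_yaw_180(90.0))  # 270.0
```

The order matters: rotate around the point the map was turned around first, then apply the offset read from Radiant.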

I'll start working on a cam in CT3D with my custom bsp, but it will be pretty annoying because I don't have my little script that parses the origin and viewangles of the player for every frame, so I'll have to do a lot of testing and guessing, and the path won't be that great. On the plus side, though, this will probably be done in Defrag, which is what the scripts are actually meant for, as opposed to Wolfcam, which requires some modding of the scripts CT3D outputs.

I might just follow the rocket from a simple, static, Z-origin, and then move X/Y to follow the rocket properly. This is so I can make sure everything is actually working, then I can make the real cam and do all the offsets and shit.

This is killing me :(

Sunday, 16 October 2011

I'm going to do a short edit.

I'm going to be making a video over the next couple days. It won't be any weird meta editing in-game, or just showing off some weird fix I did, or anything like that. I'll actually be doing some editing. I have some ideas, so I'm going to use the best content I have currently, which is pretty shit. I might even stage some frags if I really need them, but I won't try to hide it: I'll make sure it's known which ones are real and which ones are fake.

Should be done by the end of the week, most likely earlier.

University convention thing.

Today, I went to the Ontario University Fair to meet some friends. I don't really care about university, but I wanted to see these people so it was all worth it. I got there at 10:15 and sat in a corner, waiting for the other people to arrive until almost 12:30. I hate crowds, and I hate strangers, and that's all this was: hundreds upon hundreds of people I had never seen before, walking around and talking and stuff. I literally almost threw up, at least twice.

Anyway, my friends finally arrived, and we walked around for a couple hours, looking at universities and shit. It was boring as hell, and not very eventful, but worth it entirely.

Why I'm writing this here, I don't really know. I could just as easily talk to one of the many (lol 22 on Facebook and 18 on MSN) people I know, but to quote cityy: "there are just barely any people from which I feel like they have something interesting to say". Basically, people suck, and talking to them is pointless. Most of the time.

Saturday, 15 October 2011

New mouse!

My brother stole my mouse last night, along with the power cord for my computer, and he unplugged my monitor, probably in hopes of stealing that cord as well.

This forced me to go to the store and buy a new mouse. I didn't have much in the way of options, because I only had $16, and it was too cold for me to go more than a few blocks away from my house. I went to the computer store down the street, and literally the only mouse they had that wasn't complete shit was the Microsoft Comfort Optical Mouse 3000, so I bought it. The tag said $17.99, but the guy let me buy it for $16 because he's cool. I later realized that other places would have sold it to me for $20, so I saved a bit of money, which is nice.

I got home and plugged everything (back) in, booted up, and installed the drivers from the CD, because I don't ever want to try and get drivers from the internets again.

My first thought was that it felt really awkward. I'm used to one specific shape, having used it for every computer I've ever had, and this is a more modern-ish shape, with contours and everything. It feels fine, just not what I'm used to. Only after this, I noticed the side button. I freaked out. I've needed a mouse4 ever since I connected to the internet, because I've been using ctrl as my push-to-talk in programs like Ventrilo, Teamspeak and Mumble, and it gets really annoying for me and the people listening when I press my PTT all the time while browsing the web etc.

I went into the mouse settings, and it let me turn on/off side scrolling, change speed, turn on/off scroll accel (which is a really neat feature), and program each button. I can do almost anything with all four buttons on the mouse, which is really sweet. The only problem I had was that there was no "mouse4" in the drop-down menu for each button. There was left click, right click, and middle click. It wasn't mouse1-4, and there was no "side click", so unless I'm blind, this button has to be bound to some weird macro if I want it to work. Strangely, in the macro editor, there is a mouse4 setting, but the way the macros work is pretty stupid, and I can't just hold down the button. I have to either toggle it, or have it activate for a specific amount of time, from 10ms to ????ms. I went with toggle.

The mouse itself has a few annoyances as well. Because there's a side scroll, mouse3 is significantly harder to click, because there's too much emphasis on the side scrolling. The wheel also feels several mm higher than my old mouse, which is really awkward. But all of these pale in comparison to the next problem: there's a guard around the wheel, which (obviously) isn't part of mouse1 or mouse2. What I like to do sometimes, is rest my index finger on the wheel and press mouse1 from that position. I can't do that now, because of the stupid guard. This is by far the worst part of the whole mouse, and really frustrates me. I can't really change mice, though, because I'm poor and my old mouse was broken anyway.

There are other problems, like accel, which can't be modified as far as I can see. I can't toggle it, and I can't scale it, so I have to use a lower sensitivity and treat it more like a middle-high sens, which is actually perfect in Quake 3, but not so great elsewhere. I can finally use my "perfect" pitch/yaw values of 0.0054931640625 (see if you can figure out why I think this number is so awesome :D), because of the accel and 1000dpi, which is just a little bit higher than my previous 800dpi, but enough of an increase to allow me to use this sensitivity without any problems. It will just take some getting used to.

Something else, however, that I don't want to get used to, is that the speed doesn't "scale" for different resolutions. If I play Starcraft (without a widescreen mod, because I hate those, and they're cheating), it runs at 640x480. My desktop is at 1600x1200, which is substantially larger. What should happen is this: if I have to move my mouse 10cm across my mousepad to go from the left side of my desktop to the right side (assuming I have no accel; this is all hypothetical), I should also have to move it 10cm to get from the left side of the screen to the right in fullscreen programs that run at a lower resolution than my desktop. Instead, it keeps using the desktop's 1600x1200 pixels as the measurement for the overall sensitivity, so crossing the 640-pixel-wide screen takes only as much movement as moving my cursor 640 pixels across my desktop.
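
To put numbers on that, here's the arithmetic as a small sketch, using the same hypothetical 10cm and no accel (the function name is just for illustration):

```python
def distance_to_cross(screen_width_px: int,
                      desktop_width_px: int,
                      desktop_cross_cm: float) -> float:
    """Hand movement needed to cross a screen when the pixels-per-cm
    rate stays fixed at whatever it is on the desktop."""
    px_per_cm = desktop_width_px / desktop_cross_cm
    return screen_width_px / px_per_cm

# Desktop: 1600 pixels wide, 10cm of mousepad to cross it.
# Fullscreen game: 640 pixels wide, same pixels-per-cm rate.
print(distance_to_cross(640, 1600, 10.0))  # 4.0
print(1600 / 640)                          # 2.5
```

So the whole 640-pixel screen goes by in 4cm instead of 10cm, which works out to a sensitivity 2.5 times higher than on the desktop.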

Basically, this means that I have an incredibly high sensitivity in Starcraft, which is something that I can't handle. I found no way to change sensitivity per-application, unless I make a special bind (ON MOUSE4!!) that toggles my sensitivity. I would change the bind when I open Starcraft, then change it back to my PTT when I'm playing, then change it yet again when I'm done so that it doesn't take 5 minutes to do a 360 in Quake, and then change it back to my PTT for the second time. Obviously inefficient, and stupid.

I wish more companies actually let you modify all the built-in bullshit, like sensitivity, smoothing, snapping, etc. because having to deal with all the "enhancements" that come with the mouse makes getting any modern mouse not even an option for someone who actually wants to play games. I don't know how all these modern gamers do it... they are an inferior race, and yet they adapt to these things so well, without arguing or showing any signs of disagreement. It's mind-boggling.

I've read a couple articles, and seen a couple reviews, of mice with actual good drivers and utilities that let you have complete control over your mouse. This is an amazing breakthrough, and it's sad that it can even be considered a breakthrough. If I want this $90 mouse because it has an impressive sensor and a good grip, I shouldn't have to have horrible accel, angle snapping, smoothing, and whatever else these companies can dream up. I should be able to remove them with something as simple as a check box, or even a slider.

----

Anyway, I'm going to try and warm up a little bit so I can get rid of this stupid cold by tomorrow and go to the Convention Center, because I'm going no matter what, and having a cold just makes things suck in general.

Friday, 14 October 2011

Something to do while I wait for ONE demo.

I had an idea that's similar to all the stuff I've been doing recently. I'm not sure how possible it is in QL, but in Q3 it would be pretty easy. I'll run from map to map. It would be amazing if I got it to work properly, but I don't think it will work out.

To describe the idea more clearly: I will be doing a short strafe run on, say ctf4 (because the space maps are the easiest), then rocketjump "into the void", and land on the next map (might be dm18). So this is actually quite a bit more complicated than my last project, which is now on hold. But it's also more doable by me, because I don't need to wait for someone else to send me a demo; I can do it myself.

There are quite a few complications I can think of right off the bat.

First, the obvious one: It will be a huge pain in the ass to move smoothly from map to map. I have a few ideas, but they all have their own problems, and I don't know how reliable each one is.

The first way is to use some sort of savepos command to save my origin and velocity, then change x/y/z when I switch maps. Once again, this would be easy in Q3, but not possible in QL, because all QL has is setviewpos, which only allows x/y/z/y/p/r, without v0,v1,v2, like Q3's placeplayer. It automatically sets my velocity to 400 in the direction I'm facing, just like a teleporter. So that isn't really viable.
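The savepos idea boils down to: keep the velocity, shift the origin by however far apart the two maps are supposed to sit. A minimal sketch of just that arithmetic, where `PlayerState`, `move_to_next_map` and the offset values are all hypothetical and not real Q3 or QL commands:

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class PlayerState:
    """Hypothetical saved state: where the player is and how fast they move."""
    origin: Vec3
    velocity: Vec3


def move_to_next_map(state: PlayerState, map_offset: Vec3) -> PlayerState:
    """Shift the saved origin by the offset between maps, keeping velocity."""
    new_origin = tuple(o + d for o, d in zip(state.origin, map_offset))
    return PlayerState(origin=new_origin, velocity=state.velocity)


# Made-up numbers: leaving map 1 at x=4096, the next map sits 8192 units away.
saved = PlayerState(origin=(4096.0, 0.0, 512.0), velocity=(600.0, 0.0, 120.0))
restored = move_to_next_map(saved, (-8192.0, 0.0, 0.0))
print(restored)
```

The part that doesn't work in QL is the last bit: restoring the velocity along with the shifted origin.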

My second option is to somehow run on my own custom, flat .bsp, and make sure that my jumps line up with the other maps. I could add some brushes for the major structural pieces (or in some cases, make holes, like on ALL THE MAPS because they're space maps). Then it's simple; I make a single camera path that goes through the "fake" map. I capture JUST the player model and any rockets/etc. that I use, because I expect to use at least some rocketjumps to go from map to map. Then I offset the camera so that all the maps fit beside each other fine. This seems like it would require decimal-point precision in the camera placement, which means a LOT of testing, and tons of work that I don't really want to do, and is very unnecessary, because there's another step that would also have to be done, which is only slightly less monotonous: I would have to fix the leaf nodes that are stored inside the demo.

Q3MAP2 does this thing where, when compiling a .bsp, it generates little (or large) leafs all over the map. I don't know the specifics, but while inside one of these leafs, only what's inside it can be rendered. Everything outside is completely ignored; it isn't drawn, and it isn't rendered. It helps a lot with rendering efficiency, and probably with compression. I don't really know much about this, because I only took a quick look at hinting in Radiant, and that's all I did to look into the more advanced map stuff. The whole reason I brought this up is because these leafs are saved inside the demo, and the whole world disappears when you leave them. It's quite a predicament, and only matters when you try using bsps that don't belong (playing a demo on a map that it wasn't recorded on). Fixing this is a big problem, and yet another one I'd rather ignore.

The third (and final, as far as I know) way to fix this whole problem of running in-between maps, is to decompile all of the .bsps that I will be using, line them up, compile them, do my run, make the camera using this custom bsp, then offset it for each individual bsp, record only the player + related entities on my custom bsp, then each individual map, and then the sky in my own skybox bsp. This is really the least complicated way to go about this. It has quite a few more major steps than the first two solutions, but each one is a lot less time consuming, a lot more reasonable, and a lot easier.

The custom bsp will be a piece of shit with no lights, tons of misaligned textures, and broken entities/brushes. Decompiling is an imperfect technique, and can never be perfected. The reason being, the light entities themselves are ignored, while the lightmap is saved to the bsp. I don't know how unreasonable it would be to approximate the color, intensity, and position of the lights based on the lightmaps, but it seems like even a very inaccurate approximation would be better than nothing. So it would be stupid for me to use this bsp for anything else, is what I'm getting at.

I pretty much described every annoyance that I've thought might appear in this. There are some things, like chroma keying, that I'm horrible at, but that isn't even significant compared to the other problems I face with this.

The things I do keep getting more and more complicated, and I love it! It's so fun to play around with the game in this way. I don't just get a demo and upload it to youtube anymore. I actually do things now, and this I'm very happy about. I'll start working on this on Saturday, then on Monday, because I'm going to a convention on Sunday. My other trans-map air-rocket project has a higher priority than this one, though, so if someone sends me a demo while I'm in the middle of this, I'm saving my work and moving over to the other one. Unless I'm in the middle of rendering it or something.

That's all for now. I have a thing for writing about what I'm thinking, so these really stretch on for quite a bit.

Going through some code for no reason.

I tried to compile the ioQuake3 code again today. Unlike last time, several months ago, everything went relatively smoothly. I got the engine and DLLs working, though the binaries were almost double the size of the official ones. Apparently, that was to be expected, and there's no way for me to fix it (it can be fixed, but not by me, since there were no instructions in the guide).

Because I can now compile my code, I decided to go through it and play around. About 5 lines into "code\cgame\cg_playerstate.c", I was torn by just how much math is involved in this type of coding. I could get good at math again relatively easily, but as bad as I am now, I just gave up entirely after a few lines.

And that concludes that slightly anticlimactic story.

----

I originally had a point in this post, but forgot what it was as I started writing. I just remembered it!

The first part was to get a demo of a really nice LG carry. I'm talking, battlesuit + handicap. I'm talking, 200 LG cells. The kind of carry that is just unheard of. After I have my eye-popping, jaw-dropping demo, I play around with the LG beam. The idea is to make it wobble, like some sort of visualization or something, to whatever audio is being played. With this in mind, it's only natural that something with huge bass sections, like dubstep, be used. This is probably the only sort of instance where dubstep should be allowed in a Quake-related video. Anywhere else is only a couple steps away from complete and utter blasphemy.

So, how does this relate to "going through some code for no reason"? Well, I downloaded a mod called q3osc yesterday. It takes whatever entity on the map, and turns it into some sort of sound. It's like a sonification of whatever you're seeing, as opposed to a visualization of what you're hearing. The code has been released, so if I knew what I was doing at all, I could integrate parts of it into wolfcam/q3/whatever, so that a track could be set in the map/demo/whatever (when playing back a demo, the audio track is parsed from the demo, as opposed to the bsp itself, where it's originally read from), and certain entities would be affected by the mod. This, or a bind to play a track, or some message inside a snapshot somewhere in the demo, or an "at" command, could be used to play a track and create this effect inside the game. To me, this seems like an incredibly complicated thing to code, which makes it 100% off-limits to me. It's an idea, though, so maybe someone can try it. Who knows.

Another way I could go about screwing with the LG is in post processing, obviously. There are probably some effects or plugins or something in Adobe After Effects, Audition, or FL Studio. I'm pretty much as inexperienced as you can get with all three programs, so it would take a lot of fiddling and learning to get something like this working, but I know it's possible. I would have to consult with a lot of people as well, because pre-written guides can never be as helpful as someone's tutoring in real-time.

This, along with my trans-map air-rocket super-goodness, are the two most complicated edits I've thought of so far. And I will make them work!

Thursday, 13 October 2011

Step 1: complete.

I had forgotten just how efficient Carmack had made Quake 3. I read a bunch of his devblog stuff a few weeks ago, and he mentioned something about how entities such as rockets and plasma balls, once fired, are completely ignored by the game until something happens to them: either they hit something, or they disappear. The point of this was that, at the time, internet was so bad that it was necessary to save as much bandwidth as possible, even 1kb. Adding the x/y/z co-ordinates of each rocket/plasma ball for every frame would drastically increase the size of each packet, and would even create huge lag on modern systems, with modern internet.

I was thinking that I would have to add info to every frame on the co-ordinates of the rocket, but it turns out that I just specify the origin and speed, and it lasts forever. Of course, in the original demo, it hit the end of the map and disappeared. That was a total of one line, though: remove="true". I cut that out, and the rocket would continue infinitely.

I only added a few 100ms (10fps) frames to the end of this demo, so that the rocket would continue for a while, then the demo would end. It turns out that, because the position of the rocket is calculated by the engine but ignored by the client/server protocol thingy, it never becomes choppy, no matter what framerate you have. It always transitions smoothly from frame to frame, whether the frames are 1ms or 1000ms apart. Of course, if you're running at 1fps, then a real game would be pretty fucked up. That was not the case here.
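A rough sketch of why the framerate doesn't matter: if the rocket is only described by where it started, how fast it's going, and when it was fired, then its position at any time is just start + velocity * elapsed time. This is not the actual engine code; `rocket_position` and the numbers are made up for illustration:

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def rocket_position(origin: Vec3, velocity: Vec3,
                    fire_time_ms: int, now_ms: int) -> Vec3:
    """Position of a straight-flying rocket, computed from its start state."""
    elapsed_s = (now_ms - fire_time_ms) / 1000.0
    return tuple(o + v * elapsed_s for o, v in zip(origin, velocity))


start = (0.0, 0.0, 64.0)
speed = (900.0, 0.0, 0.0)  # assumed rocket speed, units per second

# Sample the same flight every 1ms and every 500ms: at any shared timestamp
# both give the same position, so nothing depends on the frame interval.
fine = {t: rocket_position(start, speed, 0, t) for t in range(0, 1001, 1)}
coarse = {t: rocket_position(start, speed, 0, t) for t in range(0, 1001, 500)}
print(coarse[500], fine[500])
```

The demo only needs to carry the start state once, and whatever plays it back can fill in every frame on its own.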

So, because this was so painfully easy and simple, I made a video. It took me about a minute, and I think it's kind of cool.

here it is!

Airrocket-map transition

This is a new idea, with some similarities to my spacectf camera stuffs video, and probably was even inspired by it.

Basically, I will have someone shoot a rocket on map 1 and miss. The rocket will fly into space. That's the first demo.

The second demo is an airrocket that hits. They both have to be space maps, because the chances of getting an airrocket at the proper angle on a non-space map are VERY slim. If I did get one, it would be really nice, but I won't even try.

What I'm going to do should be fairly obvious by this point. I'm going to follow the rocket from map 1 into map 2. It will be very hard to do, for a number of reasons.

First challenge is getting the demos. The orientation of the maps can be whatever, because I can change the yaw, pitch, and roll of the cameras a full 360 degrees, so there's no problem there. A rocket that goes out the side of one map and comes in through the top/bottom of the other would look quite nice. But there are still a lot of problems. Demo 2 should have the rocket hit while the target is below the origin. This is because there are fewer obstructions on the top of the map than the bottom, which makes everything so much easier.

The second challenge is making the rocket actually go to the next map. Lining up the cameras is one thing (a huge thing, but still only one thing). If the second demo starts too far in the middle of the map, then the first rocket will hit the edge of the map long before the second rocket even starts moving. I'm fine with overlapping the maps a little bit, so long as there are no structural overlaps. If the second demo starts too far away from the edge of the map, I will have to somehow either extend the lifetime of the first rocket manually, so it leaves the map (manually, because that's how I roll), or just cut out the rocket and animate it on a different track or something. Both ways are fairly complicated, but the first would probably require a LOT more work. I'm not sure how much, because I've never played with entities other than players before.

The third challenge is just making everything work. Layering the tracks, keying the backgrounds, and putting the skies on. I honestly don't know what the most lengthy step of this will be, because this is all new to me. What I do know is that I can't do much until I get a demo. The first demo doesn't have to be a near miss; it can just be a rocket that goes into space. Because of that, I can record it myself in a couple seconds. And since this is the demo that requires modding, I'm able to start working on this first. The only reason I wouldn't start this immediately is that I don't know if I'm going to actually be doing this, or copying the animation in the video so it lasts longer. And I don't even know if modding the demo will be necessary, because I don't have the second demo yet.

My math is horrible, so I don't know how I'll be able to calculate the angles of the rocket. I'm tempted to send it straight at a 90 degree angle so there's no y/z motion, which would mean substantially easier math (instead of doing some kind of weird triangular division and highly complex stuff like that, I just add a couple units each frame). I think the QL servers run at 45fps, which means 22ms frame time. Something else I'm not 100% sure of is the current rocket speed. I'll have to look into that, but I don't think that will take long to figure out (it's probably 900 anyway).
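For the straight-along-x case, the "add a couple units each frame" math can be sketched like this. Both the 900 speed and the 45fps are the unverified guesses from above, and the function names are made up:

```python
from typing import List

ROCKET_SPEED = 900.0  # units per second, assumed
SERVER_FPS = 45       # assumed


def frame_time_ms(fps: int) -> float:
    """Milliseconds between two server frames."""
    return 1000.0 / fps


def units_per_frame(speed: float, fps: int) -> float:
    """Distance a rocket covers during one frame."""
    return speed / fps


def positions_along_x(start_x: float, speed: float,
                      fps: int, frames: int) -> List[float]:
    """X co-ordinate of the rocket at frame 0, 1, ..., frames."""
    step = units_per_frame(speed, fps)
    return [start_x + step * i for i in range(frames + 1)]


print(frame_time_ms(SERVER_FPS))                        # about 22.2 ms
print(units_per_frame(ROCKET_SPEED, SERVER_FPS))        # 20.0
print(positions_along_x(0.0, ROCKET_SPEED, SERVER_FPS, 3))
```

If those two guesses hold, it's a flat 20 units per frame, which is about as easy as the math gets.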

----

This might as well be an OP for some stupid thread on ESR or the QL forums... 3600 characters doesn't seem very characteristic of a simple blog post. I guess I'm just that awesome.

Anyone bored enough to be reading this should look forward to this video, because it feels almost revolutionary. I finally get to put my ideas into real pixels! It feels so good being able to DO things! The only hindrance I have is content, which is hardly necessary for a lot of the things I do. Just a demo of me jumping around CTF4 was enough for my last project.

Basically, I will have someone shoot a rocket on map 1 and miss. The rocket will fly into space. That's the first demo.

The second demo is an airrocket that hits. They both have to be space maps, because the chances of getting an airrocket at the proper angle on a non-space map are VERY slim. If I did get one, it would be really nice, but I won't even try.

What I'm going to do should be fairly obvious by this point. I'm going to follow the rocket from map 1 into map 2. It will be very hard to do, for a number of reasons.

First challenge is getting the demos. The orientation of the maps can be whatever, because I can change the yaw, pitch, and roll of the cameras a full 360 degrees, so there's no problem there. A rocket that goes out the side of one map and comes in through the top/bottom of the other would look quite nice. But there's still a lot of problems. Demo 2 should have the rocket hit while the target is below the origin. This is because there are less obstructions on the top of the map than the bottom, which makes everything so much easier.

The second challenge is making the rocket actually go to the next map. Lining up the cameras is one thing (a huge thing, but still only one thing). If the second demo starts too far in the middle of the map, then the first rocket will hit the edge of the map long before the second rocket even starts moving. I'm fine with overlapping the maps a little bit, so long as there are no structural overlaps. If the second demo starts too far away from the edge of the map, I will have to either extend the lifetime of the first rocket manually, so it leaves the map (manually, because that's how I roll), or just cut out the rocket and animate it on a different track or something. Both ways are fairly complicated, but the first would probably require a LOT more work. I'm not sure how much, because I've never played with entities other than players before.

The third challenge is just making everything work. Layering the tracks, keying the backgrounds, and putting the skies on. I honestly don't know what the most lengthy step of this will be, because this is all new to me. What I do know is that I can't do much until I get a demo. The first demo doesn't have to be a near miss; it can just be a rocket that goes into space. Because of that, I can record it myself in a couple seconds. And since this is the demo that requires modding, I'm able to start working on this first. The only reason I wouldn't start this immediately is that I don't know if I'm going to actually be doing this, or copying the animation in the video so it lasts longer. And I don't even know if modding the demo will be necessary, because I don't have the second demo yet.

Keel rocketjump

I was going to make an entry for an animeseed background contest, since I had an idea of what to make. It's Halloween themed, so I figured I would just use some orange/black/grey colors and pass it off. It turned out sort-of nice, but not what I originally wanted.

The main problem with this is the aliasing. I have no graphics card, so I can't take super high-res pictures and downscale them, or take normal images with high AA. I took this screenshot at 1600x900 and upscaled, without using any blur or post-process AA. The result is a horribly aliased piece of crap.
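The "render big, then downscale" trick works because each output pixel becomes the average of several input pixels, so a hard edge turns into in-between shades. Here is a tiny sketch of that idea in plain Python on a grid of grayscale values. `downscale` is a name made up for this example, and a real image editor uses fancier filters than this plain box average.

```python
def downscale(pixels, factor):
    """Shrink a 2D grid of grayscale values by averaging factor x factor blocks."""
    height = len(pixels) // factor
    width = len(pixels[0]) // factor
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            # Collect every source pixel that falls inside this output pixel.
            block = [
                pixels[y * factor + dy][x * factor + dx]
                for dy in range(factor)
                for dx in range(factor)
            ]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# A 4x4 "silhouette" with a hard diagonal edge (0 = black, 255 = white).
hi_res = [
    [0, 0, 0, 255],
    [0, 0, 255, 255],
    [0, 255, 255, 255],
    [255, 255, 255, 255],
]
low_res = downscale(hi_res, 2)  # the two edge pixels come out grey, not pure black or white
```

Upscaling does the opposite: there is no extra detail to average, so the stair-steps just get bigger.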

Thinking of adding a bit of blur around the edges to remove some aliasing, but I'm not sure if I really want to spend the time on this. It failed pretty hard anyway.

So I decided to try and anti-alias this image. First, I blurred it a bit using a couple of different blur types in PS. That was a bit better, but because the image was upscaled as much as it was, the aliasing was more a part of the whole shape than a few imperfections here and there. This means that a simple blur wouldn't do the trick.

Because this is just a silhouette, I decided that it wouldn't be that big a deal to just go around it with the eraser tool and do it all myself. I believe that, if you want something done right, you should let a machine do it. But I'm not nearly smart enough to listen to my own advice, so I do everything manually.

This is what you get when you do everything yourself: half an hour of time wasted, and an image with imperfect anti-aliasing. There are still some deformations visible (such as on the outside of the left leg), but overall, it's probably better than the original. So there xP

You can download the image here (2560x1440)

Wednesday, 12 October 2011

Bitmap artifacting sucks

I just worked on a bitmap image for about half an hour, touching up the artifacts all over the place. It's such a pain in the ass to do.. I wish more people used higher quality jpg outputs, or used a different format that has better compression (or less compression; I would rather have a large file than an ugly image).

Anyway, here it is:

It's not really possible to see the artifacting when zoomed out, but as soon as you zoom in to something more than 75%, it becomes really obvious.

Anyway, it's been done, and it was fun. Sort-of. A little bit.

COLORS! Well... shades

I tried to mimic the WolfcamQL Wiki's color scheme as closely as I could, because Wolfcam is what I do, so some sort of unity is nice, even though nobody is reading this. Or the WC Wiki, for that matter.. :(

Oh well. It was sort-of fun to get colored properly. As usual, I did some sort of weird, roundabout process to get everything the way I wanted it to be. I'm not sure if my ways are even more reliable than any kind of automation, but I feel good when I write 25000 characters myself, rather than let some ~100-line piece of code do it all for me. Where's the fun in that?

Normalized spacectf (cont.)

Normalized spacectf

I'm going to be retexturing CTF4 with normalized textures. I doubt this will really work how I want it, but I want to do this anyway. There are only a couple dozen textures to do, plus some simple layering. It's not as fruitless or boring as my stupid CJ demo thing that I gave up on 5 minutes in.

I should be finished with the textures within an hour or so, which means I'll finish this before I go to bed. Hopefully.

The way I got a texture list for CTF4 was actually somewhat funny. I opened the .bsp in a hex editor, did a find -> replace for all "00" hex codes, then opened it in Notepad++ and removed all the other excess. Then I just made a list out of the remainder, which was the textures and locations of map entities. There are probably programs that automate this (I'm positive there are), but I do everything manually like a dumbass, so there you go.
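The hex-editor routine above can be roughly automated by scanning the file for readable text. Here is a minimal sketch in Python that does not parse the .bsp format at all; it only looks for printable strings, like the Unix `strings` tool. It assumes texture names start with `textures/`, and `find_texture_names` is a name made up for this example.

```python
import re


def find_texture_names(data, prefix=b"textures/"):
    """Return sorted, unique printable strings in `data` that start with `prefix`."""
    # A name runs from the prefix up to the first byte that is not printable
    # ASCII (usually the NUL padding that the find -> replace was removing).
    pattern = re.escape(prefix) + rb"[\x21-\x7e]+"
    return sorted({match.decode("ascii") for match in re.findall(pattern, data)})


# Made-up bytes for illustration, not taken from a real map file.
sample = (
    b"\x01\x00\x00\x00textures/example/wall_a\x00\x00\x00"
    b"\x02textures/example/floor_b\x00"
    b"textures/example/wall_a\x00"
)
names = find_texture_names(sample)
```

For a real map it would be something like `find_texture_names(open("map.bsp", "rb").read())`, with the path swapped for wherever the file actually lives. Anything that doesn't start with the prefix (entity text, model paths) gets skipped, so the manual cleanup step mostly goes away.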

CJ Visualizations (cont.)

Probably going to give up on this, since it's really a complete waste of time. There are hundreds of frames, and I have to manually add camera points for each and every one. It takes forever, and the result is a simple screenshot. It might be helpful, but it's really not worth the time. Maybe I could automate it somehow, even with my complete lack of coding knowledge...

Doing some weird visualizations for CJ demos

I'm using Camtrace 3D to visualize every other frame inside a circle jump demo so I can easily see what angles were used, how big the arc is, and a number of other things. If I knew how to code, I could make something specifically for this (I'm sure it's already been done, and there are screenshots out there. <hk> probably has some), but I'm a nub so I'm doing it this way.
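The number-crunching part of this (which angles, how big the arc) is small once the player positions are out of the demo. Here is a rough sketch in Python, assuming the per-frame (x, y) positions have already been extracted somehow, which is the hard part and isn't covered here. `arc_summary` is a made-up name, and none of this is related to how Camtrace 3D works.

```python
import math


def arc_summary(positions, step=2):
    """Summarise a jump path from per-frame (x, y) positions.

    Uses every `step`-th frame and returns (headings_in_degrees, path_length).
    """
    sampled = positions[::step]
    headings = []
    length = 0.0
    for (x0, y0), (x1, y1) in zip(sampled, sampled[1:]):
        # Direction of travel between two sampled frames.
        headings.append(math.degrees(math.atan2(y1 - y0, x1 - x0)))
        # Straight-line distance between them, added to the running total.
        length += math.hypot(x1 - x0, y1 - y0)
    return headings, length


# Made-up positions for illustration: move along x, then turn to move along y.
frames = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)]
headings, length = arc_summary(frames)
```

The headings show how the direction of travel changes over the jump, and the length is a rough size for the arc. Sampling every other frame matches what I'm doing by hand; `step=1` would use every frame.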

It's interesting though; every time I open a demo, I learn something new, which is totally worth it. I can blindly rewrite a demo, fix a few things, remove useless info, and make it generally "better". Of course, since I would be doing this all manually, I can't do it for long demos; only ones that are a couple dozen frames long, which makes it almost useless, but whatever. Eventually I'll learn some sort of coding language and automate these processes, so that they can actually be used by someone, somewhere.

Of course, my timescale fix will be my own until someone else figures it out. I want to test other people's DeFRaG anti-cheat systems with it. If I supplied the original timescaled demo and the fix, it would be too easy to figure out how it works, though, so I have to be careful what I show to whom.

That concludes my "first" blog entry. I wonder if this will become boring as fast as everything else I do? I remind myself of Lloyd Irving; I become incredibly enthusiastic about something, then after a few minutes, I become bored and find something else to occupy me. I also ramble.
